(If you are looking for Jupyter notebooks, see the notebooks directory.)

Shortclips tutorial

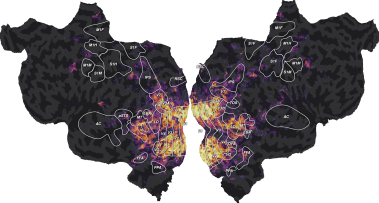

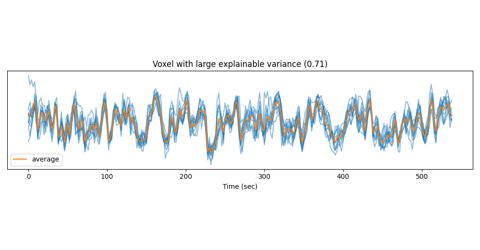

This tutorial describes how to perform voxelwise modeling on a visual imaging experiment.

Data set: This tutorial is based on publicly available data published on GIN [4b]. This data set contains BOLD fMRI responses recorded from human subjects viewing a set of natural short movie clips. The functional data were collected from five subjects, in three sessions over three separate days for each subject. Details of the experiment are described in the original publication [4]. If you publish work using this data set, please cite the original publication [4] and the GIN data set [4b].

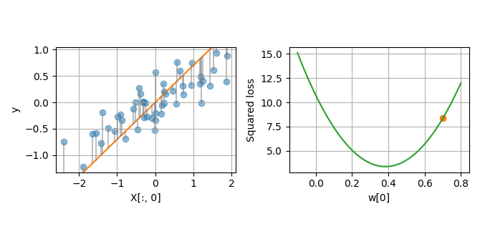

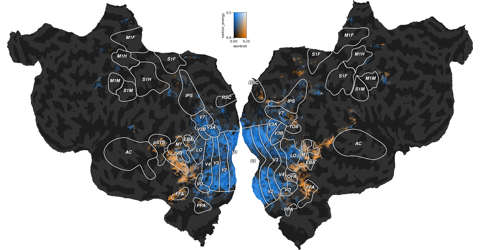

Models: This tutorial implements several voxelwise encoding models:

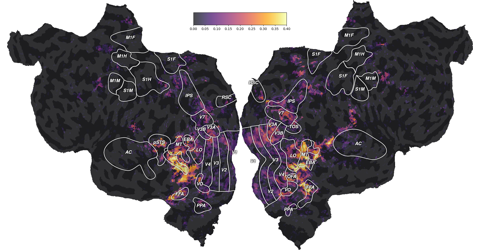

a ridge model with wordnet semantic features as described in [4].

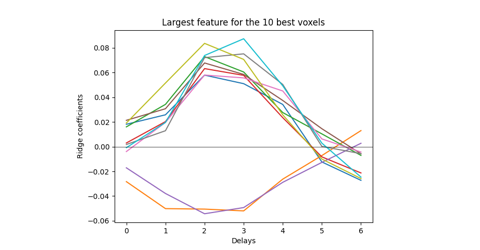

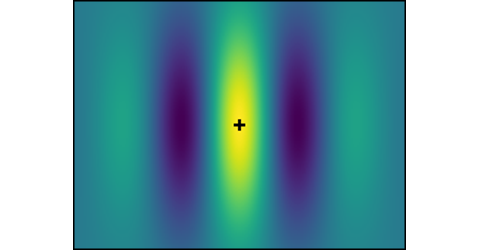

a ridge model with motion-energy features as described in [3].

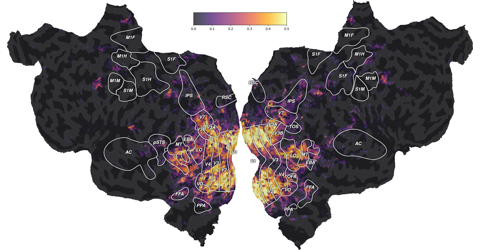

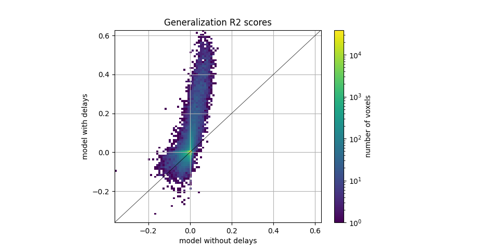

a banded-ridge model with both feature spaces as described in [12].
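The difference between the ridge and banded-ridge models can be stated compactly. Ridge regression fits weights b by minimizing ||Y - X b||² + alpha ||b||², with a single regularization strength alpha shared by all features. Banded ridge concatenates several feature spaces X_1, ..., X_k (here, wordnet and motion-energy features) and gives each one its own strength, minimizing ||Y - sum_i X_i b_i||² + sum_i alpha_i ||b_i||². The NumPy sketch below is illustrative only: it uses random synthetic features with hypothetical sizes, fixes the per-band alphas by hand (the himalaya estimators used in the tutorials select them by cross-validation instead), and checks a standard identity, namely that banded ridge equals ordinary ridge with alpha = 1 after scaling each feature space by 1/sqrt(alpha_i).

```python
import numpy as np


def ridge(X, Y, alpha):
    """Closed-form ridge solution: (X^T X + alpha * I)^{-1} X^T Y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)


def banded_ridge(Xs, Y, alphas):
    """Closed-form banded ridge with one regularization strength per feature space.

    Minimizes ||Y - sum_i X_i b_i||^2 + sum_i alpha_i ||b_i||^2.
    """
    X = np.hstack(Xs)
    # Diagonal penalty: alpha_i repeated once for every column of feature space i.
    penalty = np.concatenate([np.full(Xi.shape[1], a) for Xi, a in zip(Xs, alphas)])
    return np.linalg.solve(X.T @ X + np.diag(penalty), X.T @ Y)


# Synthetic stand-ins for two feature spaces and a few voxels (hypothetical sizes).
rng = np.random.default_rng(0)
n_samples, n_voxels = 200, 3
X_a = rng.standard_normal((n_samples, 10))  # first feature space
X_b = rng.standard_normal((n_samples, 6))   # second feature space
Y = rng.standard_normal((n_samples, n_voxels))

alphas = [10.0, 0.1]
W_banded = banded_ridge([X_a, X_b], Y, alphas)

# Equivalent formulation: scale each feature space by 1 / sqrt(alpha_i),
# fit ordinary ridge with alpha = 1, then map the weights back to the original scale.
scales = np.concatenate([
    np.full(X_a.shape[1], 1.0 / np.sqrt(alphas[0])),
    np.full(X_b.shape[1], 1.0 / np.sqrt(alphas[1])),
])
X_scaled = np.hstack([X_a, X_b]) * scales
W_back = ridge(X_scaled, Y, alpha=1.0) * scales[:, None]

print(np.allclose(W_banded, W_back))  # True
```

When all alpha_i are equal, banded ridge reduces to ordinary ridge on the concatenated features; letting them differ is what allows the model to down-weight a less useful feature space.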

Scikit-learn API: These tutorials use scikit-learn to define the preprocessing steps, the modeling pipeline, and the cross-validation scheme. If you are not familiar with the scikit-learn API, we recommend the scikit-learn getting started guide. We also use much of the scikit-learn terminology, which is explained in detail in the scikit-learn glossary of common terms and API elements.
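The preprocessing / pipeline / cross-validation pattern described above can be sketched as follows. This is a minimal illustration, not the tutorials' code: it uses scikit-learn's `RidgeCV` as a stand-in for the himalaya estimators the tutorials rely on, and synthetic random data in place of real stimulus features and BOLD responses. `KFold` without shuffling keeps contiguous blocks of time points together, which only roughly mimics splitting by acquisition run.

```python
import numpy as np
from sklearn.linear_model import RidgeCV
from sklearn.metrics import r2_score
from sklearn.model_selection import KFold
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-ins: X plays the role of stimulus features (time x features),
# Y the role of BOLD responses (time x voxels).
rng = np.random.default_rng(0)
n_samples, n_features, n_voxels = 300, 20, 5
X = rng.standard_normal((n_samples, n_features))
true_weights = rng.standard_normal((n_features, n_voxels))
Y = X @ true_weights + 0.5 * rng.standard_normal((n_samples, n_voxels))

# Hold out the last time points as a test set (no shuffling for time series).
X_train, X_test = X[:240], X[240:]
Y_train, Y_test = Y[:240], Y[240:]

# Contiguous folds: KFold without shuffling splits the training set into blocks.
cv = KFold(n_splits=5, shuffle=False)
alphas = np.logspace(-2, 4, 13)

# Preprocessing (feature centering) and model chained into a single estimator.
pipeline = make_pipeline(
    StandardScaler(with_mean=True, with_std=False),
    RidgeCV(alphas=alphas, cv=cv),
)
pipeline.fit(X_train, Y_train)

# One R^2 score per voxel on held-out data.
scores = r2_score(Y_test, pipeline.predict(X_test), multioutput="raw_values")
print("selected alpha:", pipeline[-1].alpha_)
print("test R^2 per voxel:", np.round(scores, 3))
```

With a `cv` splitter, scikit-learn's `RidgeCV` selects a single alpha shared by all voxels; voxelwise modeling typically selects one alpha per voxel, which is one reason the tutorials use himalaya's estimators instead.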

Running time: With a GPU backend in himalaya, most of these tutorials run in a reasonable time (under 1 minute for most examples, about 7 minutes for the banded ridge example). A CPU backend is slower, typically by a factor of about 10.

Requirements: This tutorial requires the following Python packages:

voxelwise_tutorials (this repository) and its dependencies

cupy or pytorch (optional, to use a GPU backend in himalaya)
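Since cupy and pytorch are optional, a script may want to check which of them is installed before choosing a himalaya backend. The sketch below is an assumption-laden helper, not part of voxelwise_tutorials or himalaya: `pick_himalaya_backend` is a hypothetical name, and it only checks that the packages are importable (for cupy, an installed package does not guarantee that a GPU is usable).

```python
import importlib.util


def pick_himalaya_backend():
    """Hypothetical helper: return a himalaya backend name based on installed packages.

    Prefers cupy, then pytorch with CUDA, and falls back to the NumPy (CPU) backend.
    """
    # find_spec only checks that cupy is installed, not that a GPU is available.
    if importlib.util.find_spec("cupy") is not None:
        return "cupy"
    if importlib.util.find_spec("torch") is not None:
        import torch

        # Only pick the CUDA backend of pytorch if a GPU is actually visible.
        if torch.cuda.is_available():
            return "torch_cuda"
    return "numpy"


backend_name = pick_himalaya_backend()
print(backend_name)
```

The returned name can then be passed to himalaya's `set_backend`, for example `set_backend(backend_name, on_error="warn")`, which warns and keeps the current backend instead of raising if the requested one cannot be loaded.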

Gallery of scripts: Click on each thumbnail below to open the corresponding page:

Fit a banded ridge model with both wordnet and motion energy features

Vim-2 tutorial

Note

This tutorial is redundant with the “Shortclips tutorial”. It uses the “vim-2” data set, in which brain responses are limited to the occipital lobe and no mappers are provided to plot the data on flatmaps. We recommend using the “Shortclips tutorial”, which uses full-brain responses.

This tutorial describes how to perform voxelwise modeling on a visual imaging experiment.

Data set: This tutorial is based on publicly available data published on CRCNS [3b]. The data are briefly described in the data set description PDF, and in more detail in the original publication [3]. If you publish work using this data set, please cite the original publication [3] and the CRCNS data set [3b].

Requirements: This tutorial requires the following Python packages:

voxelwise_tutorials (this repository) and its dependencies

cupy or pytorch (optional, to use a GPU backend in himalaya)

Gallery of scripts: Click on each thumbnail below to open the corresponding page: